Why Some SSDs Keep Their Cool (and Speed) Under Pressure

Ever started copying a massive file – maybe a multi-gigabyte game install or a big video project – to your speedy NVMe SSD, only to watch the transfer rate plummet after the first few seconds? You see the blazing advertised speeds for a moment, then... disappointment. The progress bar starts crawling.

What gives? Your drive isn't necessarily faulty. This slowdown is often a normal consequence of how modern consumer SSDs cleverly balance cost, capacity, and speed. But understanding why it happens reveals why some drives maintain impressive performance even when pushed hard, while others falter.

The Flash Memory Trade-Off: Speed vs. Density

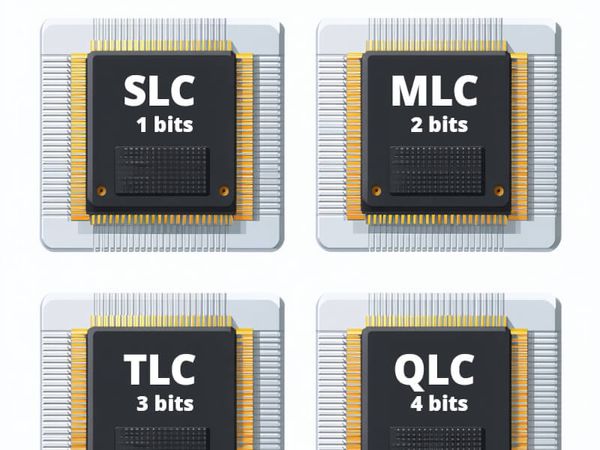

At the core of your SSD are NAND flash memory cells, which store your data. There are several types, and each one trades speed and endurance against density and cost:

- SLC (Single-Level Cell): Stores 1 bit per cell. Fastest, most durable, but most expensive and lowest density (capacity).

- MLC (Multi-Level Cell): Stores 2 bits per cell. Slower than SLC, less durable, but denser and cheaper.

- TLC (Triple-Level Cell): Stores 3 bits per cell. Slower still, less durable than MLC, but even denser and cheaper. (Very common today).

- QLC (Quad-Level Cell): Stores 4 bits per cell. Slowest native speed, lowest endurance, but highest density and lowest cost. (Found in many budget/high-capacity drives).
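Why does each extra bit cost speed and endurance? Storing n bits in one cell means the cell must hold one of 2^n distinguishable charge levels, so every added bit doubles the number of levels the controller has to place and read precisely. The short sketch below only does that arithmetic. The names are illustrative, and "density" here means bits per cell, ignoring other factors such as how many layers of cells are stacked.

```python
# Bits stored per cell for each NAND type listed above.
NAND_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def voltage_levels(bits_per_cell: int) -> int:
    """Distinct charge states a cell must hold to encode `bits_per_cell` bits."""
    return 2 ** bits_per_cell


def relative_density(bits_per_cell: int, baseline_bits: int = 1) -> float:
    """Bits per cell relative to SLC, for the same number of cells."""
    return bits_per_cell / baseline_bits


for name, bits in NAND_TYPES.items():
    print(f"{name}: {bits} bit(s)/cell, {voltage_levels(bits)} charge levels, "
          f"{relative_density(bits):.0f}x SLC density")
```

Going from TLC to QLC adds only one third more capacity per cell but doubles the levels (8 to 16), which helps explain why QLC gives up so much speed and endurance.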

Manufacturers want to give you lots of capacity affordably, so they primarily use TLC or QLC NAND. But they also want those headline-grabbing speeds. How do they bridge the gap?

The SLC Cache: A Temporary Speed Boost

The magic trick is SLC caching. Most TLC and QLC drives designate a portion of their NAND flash to operate in a faster SLC mode (writing only 1 bit per cell instead of 3 or 4).
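Running cells in SLC mode is not free: a cell that could hold 3 bits (TLC) or 4 bits (QLC) holds only 1 while it serves as cache. A minimal sketch of that cost, assuming the cache is carved directly out of native NAND (the function name is hypothetical):

```python
def native_capacity_consumed(slc_cache_gb: float, native_bits_per_cell: int) -> float:
    """GB of native TLC/QLC capacity tied up by an SLC cache of `slc_cache_gb`.

    In SLC mode each cell stores 1 bit instead of `native_bits_per_cell`,
    so the same cells would hold that many times more data natively.
    """
    if native_bits_per_cell < 1:
        raise ValueError("bits per cell must be at least 1")
    return slc_cache_gb * native_bits_per_cell


# Hypothetical 100 GB SLC cache
print(native_capacity_consumed(100, 3))  # TLC drive -> 300
print(native_capacity_consumed(100, 4))  # QLC drive -> 400
```

This is why a large SLC cache is only practical when the space behind it is otherwise unused, which leads to the static and dynamic designs below.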

Think of it like an express lane or a small, super-fast temporary holding area. When you write data, it goes straight into this speedy SLC cache first, giving you those fantastic initial burst speeds you see advertised.

This cache can be:

- Static: A fixed, small portion of the NAND always reserved for SLC mode.

- Dynamic: Uses available free space on the drive as an SLC cache, potentially offering a much larger cache size when the drive is empty, but shrinking as it fills up. Many drives use a hybrid approach.
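The shrinking dynamic cache can be sketched with a simple model. This is an assumption for illustration, not any vendor's actual firmware policy: free native capacity run in SLC mode yields free space divided by bits per cell, optionally capped by the firmware, plus a small static portion. Real drives reserve some space and use their own rules, so treat the numbers as shape, not specification.

```python
def slc_cache_size_gb(free_space_gb, native_bits_per_cell,
                      static_gb=0.0, dynamic_cap_gb=None):
    """Hypothetical hybrid SLC cache size in GB.

    Assumes all free native space can run in SLC mode (1 bit per cell),
    optionally capped at `dynamic_cap_gb`, plus a fixed static cache.
    """
    dynamic = free_space_gb / native_bits_per_cell
    if dynamic_cap_gb is not None:
        dynamic = min(dynamic, dynamic_cap_gb)
    return static_gb + dynamic


CAPACITY_GB = 1000  # hypothetical 1 TB TLC drive
for used_pct in (0, 50, 90):
    free = CAPACITY_GB * (1 - used_pct / 100)
    size = slc_cache_size_gb(free, 3, static_gb=6, dynamic_cap_gb=200)
    print(f"{used_pct}% full -> about {size:.0f} GB of SLC cache")
```

Under these assumed numbers the cache falls from about 206 GB on an empty drive to under 40 GB on a 90% full one, so the same drive can hit the cache wall much sooner as it fills up.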

Hitting the Cache Wall: Why Speeds Drop

This caching works brilliantly for small, bursty writes: saving documents, installing small updates, or the steady trickle of writes from your browser and operating system. But what happens during that massive file copy?

Eventually, the SLC cache fills up. The drive now has two problems:

- It can't accept new data into the fast cache anymore.

- It needs to clear out the cache by moving the already-written data from the SLC portion to the slower, main TLC or QLC storage area.

Suddenly, the drive has to write incoming data directly to the slower TLC/QLC NAND, and depending on its firmware it may also be moving data out of the cache at the same time. It's like the express lane backing up and forcing traffic onto the slower local roads, all while road crews are trying to move the backed-up cars elsewhere.

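The result? A significant drop in write speed. This lower figure is usually called the post-cache or sustained write speed. Reviewers sometimes split it further: "direct-to-TLC" or "direct-to-QLC" speed when the drive writes straight to its native NAND, and a "folding" state, often slower still, when the drive is also emptying the SLC cache into native NAND.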
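A little arithmetic shows how much the post-cache speed dominates a big copy. The sketch below assumes a simplified two-phase model: the cache starts empty, absorbs data at burst speed until full, and everything after that goes at a flat post-cache speed (no draining during the copy, no separate folding phase). The drive figures are hypothetical.

```python
MB_PER_GB = 1000  # decimal units, as drive makers quote them


def write_time_s(file_gb, cache_gb, burst_mbps, post_cache_mbps):
    """Seconds to write `file_gb` sequentially under a two-phase model:
    burst speed until the SLC cache is full, post-cache speed afterward."""
    file_mb = file_gb * MB_PER_GB
    cached_mb = min(file_gb, cache_gb) * MB_PER_GB  # absorbed at burst speed
    spill_mb = file_mb - cached_mb                  # written after the cache fills
    return cached_mb / burst_mbps + spill_mb / post_cache_mbps


def average_speed_mbps(file_gb, cache_gb, burst_mbps, post_cache_mbps):
    """Average MB/s over the whole write."""
    seconds = write_time_s(file_gb, cache_gb, burst_mbps, post_cache_mbps)
    return file_gb * MB_PER_GB / seconds


# Hypothetical drive: 30 GB cache, 5000 MB/s burst, 500 MB/s after the cache fills
t = write_time_s(100, 30, 5000, 500)
avg = average_speed_mbps(100, 30, 5000, 500)
print(f"100 GB copy: {t:.0f} s, average {avg:.0f} MB/s")
```

For this made-up drive, the first 30 GB take 6 seconds and the remaining 70 GB take 140, so a 100 GB copy averages roughly 685 MB/s despite the 5000 MB/s headline figure.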

Maintaining Momentum: Why Some Drives Perform Better Under Load

This is where higher-end SSDs often differentiate themselves. While nearly all consumer TLC/QLC drives use SLC caching, some handle the post-cache scenario much more gracefully:

- Larger/Smarter Caches: Drives with larger static caches or more effective dynamic cache management can sustain burst speeds for longer.

- Powerful Controllers: The SSD controller is the drive's brain. More sophisticated controllers can manage the data shuffling (from cache to main NAND) more efficiently, minimizing the performance hit when writing directly to TLC/QLC.

- Higher-Quality NAND: The underlying speed and characteristics of the TLC or QLC NAND itself play a role. Better NAND might offer higher native write speeds even without the SLC boost.

- Optimized Firmware: The drive's internal software (firmware) dictates how the cache is managed and how efficiently data is written post-cache.

Some premium drives might achieve direct-to-TLC write speeds that are still quite high – perhaps several hundred MB/s or even over 1 GB/s – while budget drives might slow to a crawl, sometimes even below hard drive speeds, once their cache is saturated.
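The per-second speed graphs in reviews can be approximated with the same simplified two-phase model (burst speed until the cache is full, then a flat post-cache speed). The sketch below computes cumulative megabytes written over time and differences it second by second, so the second in which the cache runs out shows a blended value, much like a real graph. Both drive profiles are hypothetical, chosen only to mirror the premium versus budget contrast above.

```python
import math


def throughput_trace(file_gb, cache_gb, burst_mbps, post_cache_mbps):
    """Per-second write speed (MB/s) for one large sequential write under a
    two-phase model. Decimal units: 1 GB = 1000 MB."""
    file_mb = file_gb * 1000
    cache_mb = min(cache_gb, file_gb) * 1000
    t_cache = cache_mb / burst_mbps  # seconds until the cache is full

    def written_by(t):
        # Cumulative MB written after t seconds, never more than the file.
        if t <= t_cache:
            done = burst_mbps * t
        else:
            done = cache_mb + post_cache_mbps * (t - t_cache)
        return min(done, file_mb)

    total_s = t_cache + (file_mb - cache_mb) / post_cache_mbps
    return [written_by(s + 1) - written_by(s) for s in range(math.ceil(total_s))]


# Two hypothetical drives writing the same 200 GB file
drives = {
    "premium TLC": dict(cache_gb=100, burst_mbps=7000, post_cache_mbps=1500),
    "budget QLC": dict(cache_gb=25, burst_mbps=3500, post_cache_mbps=100),
}
for name, spec in drives.items():
    trace = throughput_trace(200, **spec)
    print(f"{name}: {trace[0]:.0f} MB/s at first, "
          f"{trace[-2]:.0f} MB/s near the end, {len(trace)} s total")
```

With these assumed numbers the premium drive finishes in under a minute and a half, while the budget drive spends most of roughly half an hour at 100 MB/s, below what a typical hard drive manages on sequential writes.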

What Does This Mean for You? Look Beyond the Peak

If your typical usage involves mostly small, quick operations, the peak burst speed enabled by the SLC cache is what you'll experience most often, and most modern SSDs feel incredibly responsive.

However, if you frequently perform tasks involving large, sustained writes:

- Copying huge media files

- Video editing and rendering

- Installing massive games

- Writing large backups

Then the sustained write performance (the speed after the cache fills) becomes a much more important metric. When looking at SSD reviews, pay attention to benchmarks that show performance over the duration of a large file copy, not just the peak number. This direct-to-native-NAND speed gives a truer picture of how the drive will handle heavy lifting.
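If you want a rough look at this behavior on your own drive, one do-it-yourself approach is to write a large file in fixed-size chunks and time each one. The sketch below is an assumption-laden illustration, not a substitute for a proper benchmark tool such as fio: the function name and 64 MiB chunk size are arbitrary, and although `os.fsync` asks the operating system to push each chunk to the drive, the drive may still acknowledge writes from its own DRAM buffer, so treat the numbers as approximate.

```python
import os
import time

MIB = 1024 * 1024


def measure_write_throughput(path, total_mb, chunk_mb=64):
    """Write `total_mb` MiB of random data to `path` in `chunk_mb` MiB pieces,
    fsync after each piece, and return each piece's speed in MiB/s.

    A sharp, lasting drop in the returned list suggests the point where
    the drive's SLC cache ran out.
    """
    # Random bytes so a compressing controller can't shortcut the write.
    # One buffer is reused for every chunk to keep the script itself fast.
    data = os.urandom(chunk_mb * MIB)
    speeds = []
    remaining = total_mb
    with open(path, "wb") as f:
        while remaining > 0:
            this_mb = min(chunk_mb, remaining)
            piece = data[: this_mb * MIB]
            start = time.perf_counter()
            f.write(piece)
            f.flush()             # Python's buffer -> operating system
            os.fsync(f.fileno())  # operating system -> drive
            elapsed = time.perf_counter() - start
            speeds.append(this_mb / max(elapsed, 1e-9))
            remaining -= this_mb
    return speeds
```

To use it, point `path` at a file on the drive under test, pick a `total_mb` comfortably larger than the cache you expect (tens of thousands of MiB for a drive with a large dynamic cache), make sure that much free space is available, then print or plot the list and delete the file afterward.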

The Takeaway: SLC caching is a clever technology that makes fast, affordable SSDs possible. But for demanding users, the real test of a drive often lies not just in its initial burst of speed, but in how well it maintains its composure and performance when the pressure is on and the cache runs out.